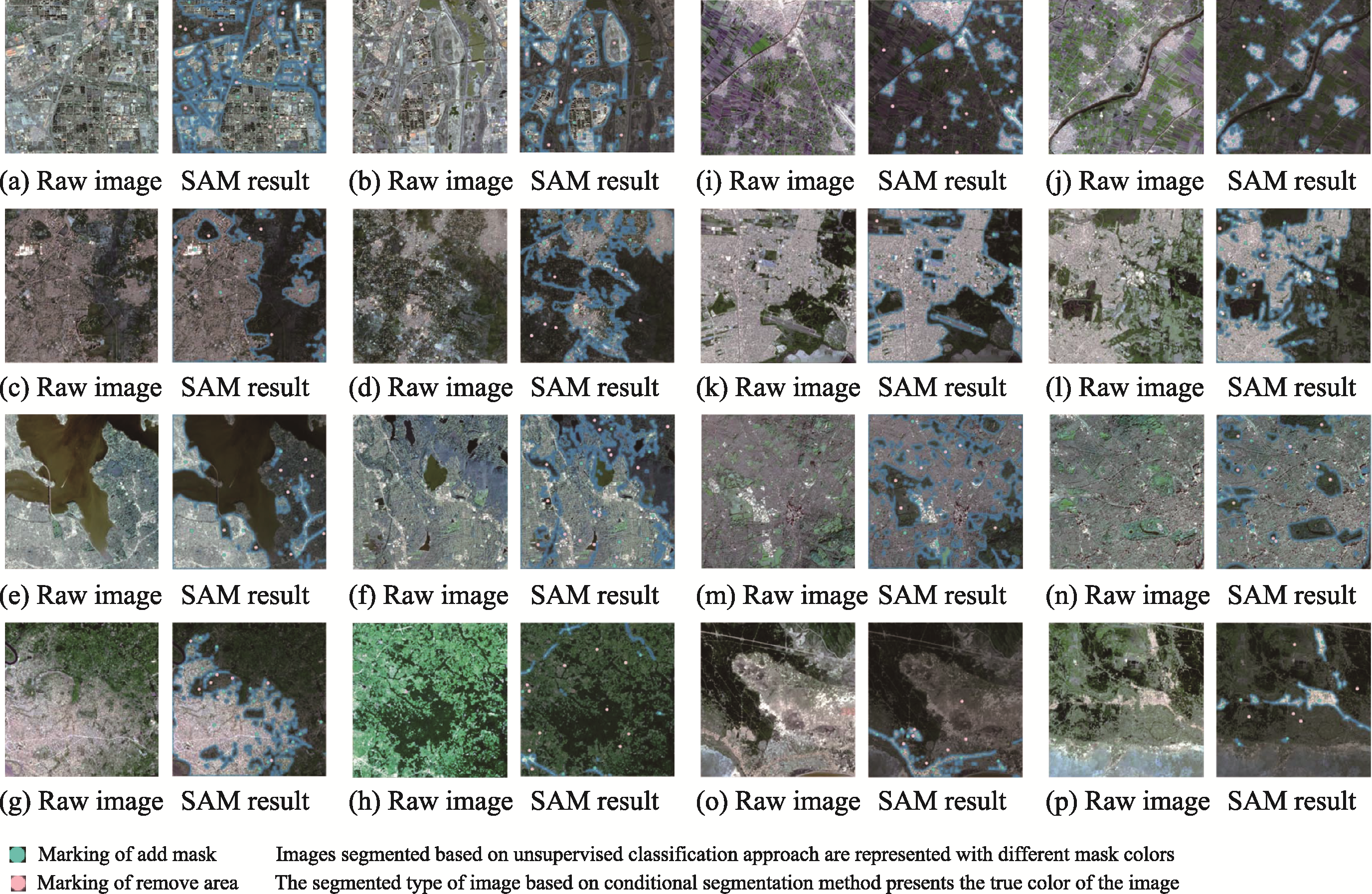

| Image ID | SAM | Image ID | SAM |
|---|---|---|---|
| 1 | 0.83 | 9 | 0.97 |
| 2 | 0.76 | 10 | 0.98 |
| 3 | 0.91 | 11 | 0.93 |
| 4 | 0.83 | 12 | 0.94 |
| 5 | 0.78 | 13 | 0.91 |
| 6 | 0.89 | 14 | 0.94 |
| 7 | 0.75 | 15 | 0.98 |
| 8 | 0.94 | 16 | 0.95 |

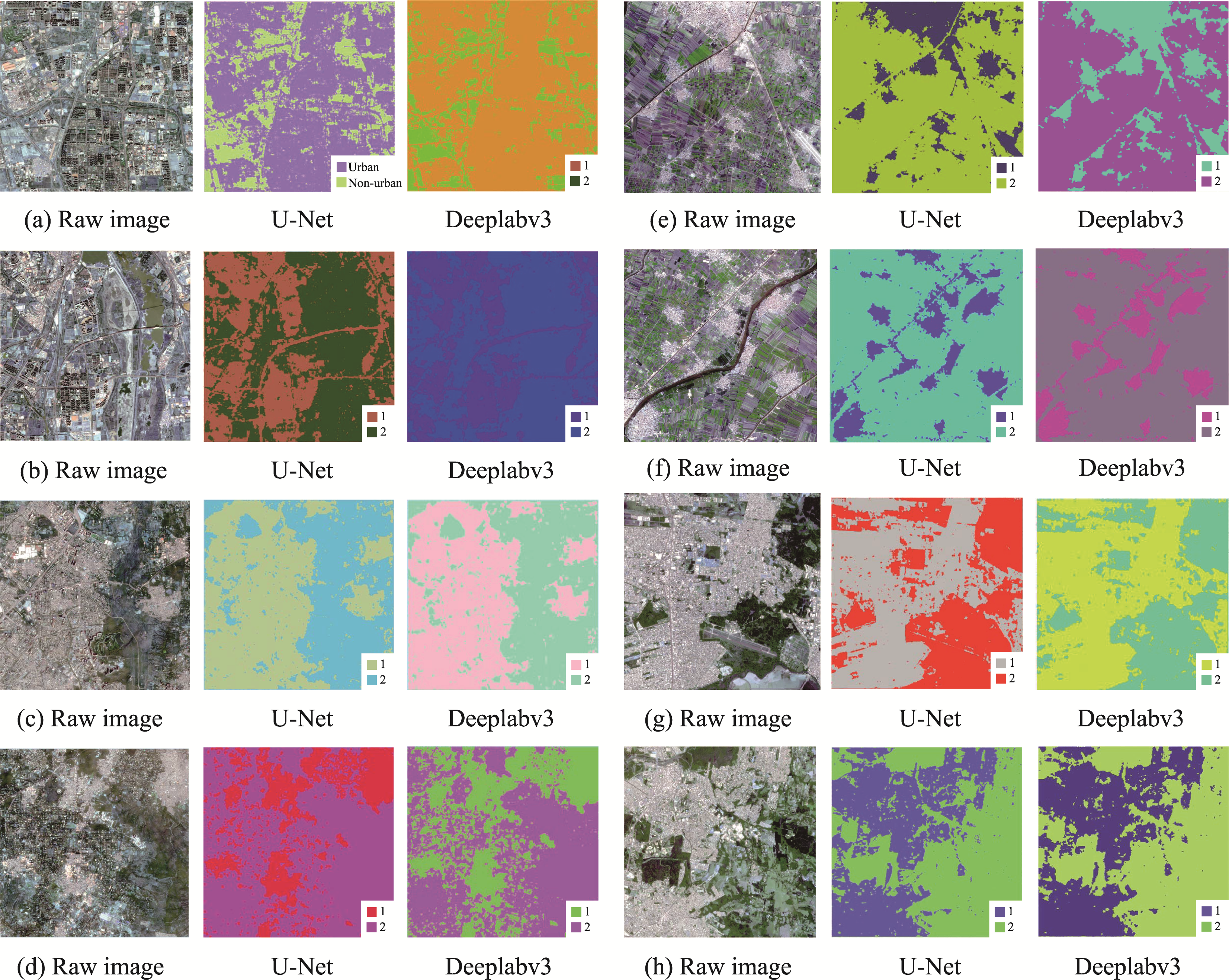

Figure 16 UF extraction results from the two deep learning algorithms for images a to h. Images a and b are from Beijing, China; c and d from New Delhi, India; e and f from Mansouria, Egypt; and g and h from Porto Alegre, Brazil. In the legend, 1 represents urban areas and 2 represents non-urban areas.


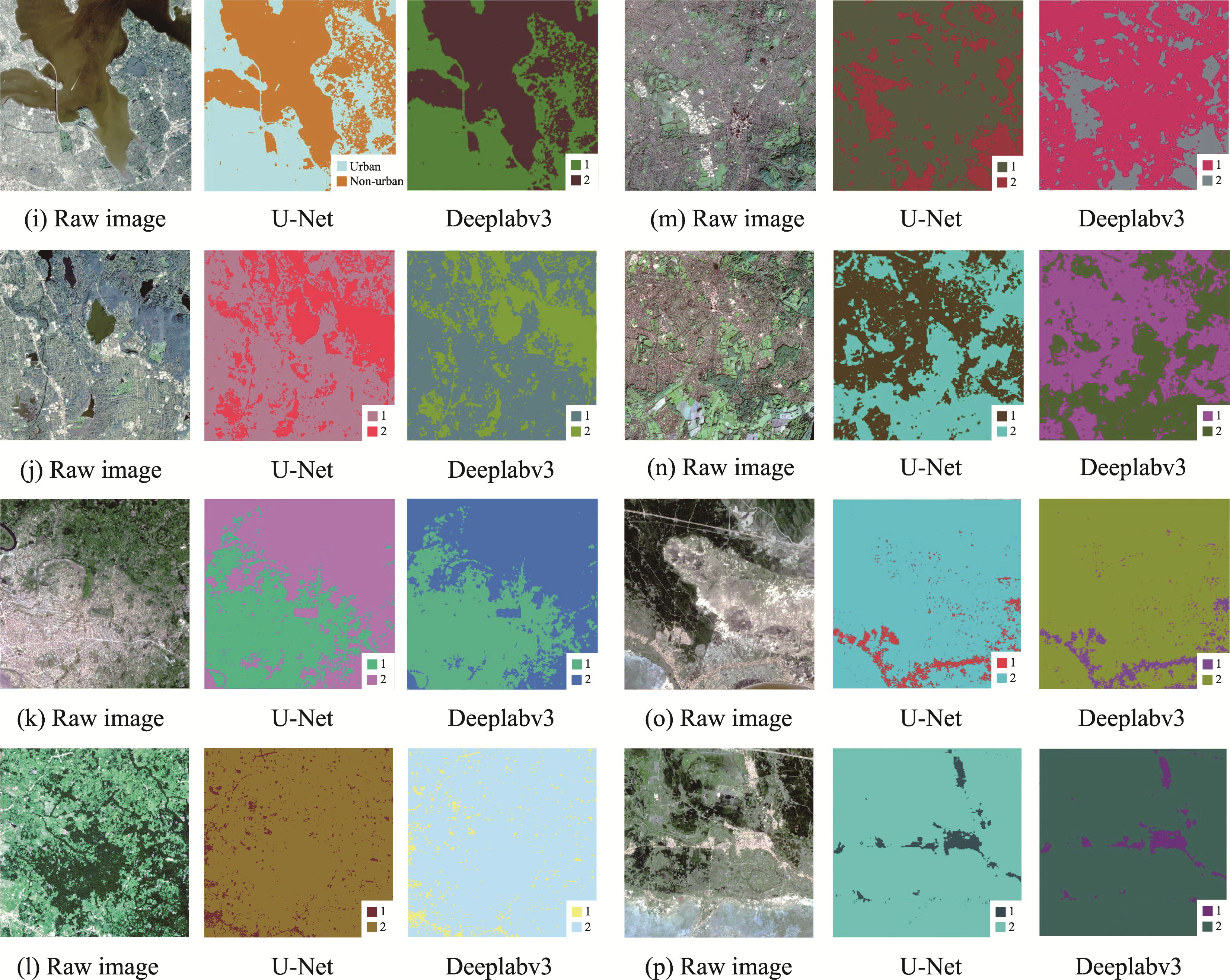

Figure 17 UF extraction results from the two deep learning algorithms for images i to p. Images i and j are from New York, USA; k and l from Kisangani, Sudan; m and n from London, UK; and o and p from Phan Thiet, Vietnam. In the legend, 1 represents urban areas and 2 represents non-urban areas.


Figure 19 SAM-based UF extraction results. The extracted urban areas retain their original colour, which distinguishes them from non-urban areas, and a blue border separates the two types of environment. Images a and b are from Beijing, China; c and d from New Delhi, India; e and f from Mansouria, Egypt; g and h from Porto Alegre, Brazil; i and j from New York, USA; k and l from Kisangani, Sudan; m and n from London, UK; and o and p from Phan Thiet, Vietnam.
